IBM and CERN are using time-series transformers to model particle collisions at the world’s largest collider; it’s part of a larger goal to turn raw observational data into actionable insights.

Ordinary matter gives shape to everything we know of the world, but it represents just 5% of the universe. The rest is made up of mysterious components that scientists have termed dark energy and dark matter.

An ongoing search for this missing stuff is taking place in tunnels below the Alps, at CERN’s Large Hadron Collider (LHC). Charged particles are smashed together at near light speed to uncover what the universe is made of and how it works. New particles are created in each collision, and as they interact with the LHC’s detectors, sub-particles are formed and measured.

The Higgs boson, involved in giving all other particles their mass, was discovered this way in 2012. But before collecting the physical proof, scientists ran extensive simulations to design their experiments, interpret the results, and test their hypotheses by comparing simulated outcomes against real-life observations.

CERN generates as much synthetic data from simulations as it does from real-life experiments. But simulations are expensive, and only getting more so as the LHC and its detectors are upgraded to learn more about the Higgs and improve the odds of finding new particles. Once the upgrades are finished, CERN’s real and surrogate experiments will generate substantially more data and consume substantially more computing power.

To help ease the crunch, IBM recently began working with CERN to apply its time series foundation models to modeling particle collisions. What large language models (LLMs) did for text analysis, IBM is hoping time-series transformers can do for prediction tasks based on real or synthetic data. If an AI model can learn a physical process from a sequence of measurements, rather than statistical calculations, it could pave the way for faster, more powerful predictions in just about any field.

Theoretical physics is IBM’s focus with CERN, but the project has broad relevance to any organization modeling the behavior of complex physical systems. Foundation models have the power to transform raw, high-resolution sensor data into a digital representation of reality that enterprises can mine for new ways of improving their products or operations.

“How can I adjust the manufacturing process to produce more paper or sugar?” said Jayant Kalagnanam, director of AI applications at IBM Research. “How often should I service a machine to prolong its lifespan? And what’s the best way to design an experiment to get the desired results? A foundation model that can ‘learn’ a physical process from observational data lets you ask the operational questions that previously were out of reach.”

Modeling physical processes from observations instead of statistical probabilities

Many organizations are drowning in sensor data. Billions to trillions of highly detailed observations could be turned into valuable insights if only we could see the forest for the trees.

Foundation models are now bringing this clarity to modeling the physical world, as they did for modeling natural language and images. In 2021, IBM was the first to apply transformers to raw time-series data with multiple variables. The work inspired a wave of similar models, but that initial enthusiasm waned after a team from Hong Kong showed that a simple regression model could do better on a range of tasks.

The main flaw of those early time-series transformers was to treat measurements in time the same way as words, the team showed. When each time step is tokenized and fed to the transformer, local context gets lost. The resulting errors are compounded as the number of variables, and the risk of meaningless correlations, grow.

This problem had earlier been addressed in adapting transformers to vision tasks like object classification and scene recognition. IBM researchers saw that those same ideas could be applied to time series forecasting.

IBM’s first innovation was to group consecutive time points into one token, just as neighboring pixels in an image are aggregated into “patches” to make the data easier for the transformer to digest. Segmenting and consolidating time steps in this way preserves more local context. It also cuts computation costs, speeding up training and freeing up memory to process more historical data.
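The patching idea can be illustrated with a minimal sketch. This is not IBM’s implementation; the function name `make_patches` and the parameters `patch_len` and `stride` are hypothetical, chosen only to show how consecutive time points can be grouped into one token.

```python
from typing import List


def make_patches(series: List[float], patch_len: int, stride: int) -> List[List[float]]:
    """Group consecutive time points into patches (hypothetical helper).

    Each patch would become one input token, so neighboring measurements
    stay together instead of being tokenized one time step at a time.
    """
    if patch_len <= 0 or stride <= 0:
        raise ValueError("patch_len and stride must be positive")
    last_start = len(series) - patch_len
    return [series[start:start + patch_len] for start in range(0, last_start + 1, stride)]


# Twelve time steps grouped into non-overlapping patches of four points each.
series = [float(t) for t in range(12)]
patches = make_patches(series, patch_len=4, stride=4)
print(len(series), "time steps ->", len(patches), "tokens")
```

In this toy case, 12 time steps shrink to 3 tokens. Because standard self-attention compares every token with every other token, fewer tokens means far fewer pairwise comparisons, which is consistent with the savings in computation and memory described above.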

IBM’s next breakthrough was to narrow the transformer’s sweeping attention mechanism. Rather than model the interaction of all variables through time, it was enough to just compute their interactions at each time step. These time-synchronized correlations could then be organized into a matrix, where the most meaningful relationships could be extracted.

“Think of it as a graph,” said IBM researcher Vijay Ekambaram. “It’s a map of how the variables are related and influence each other over time.” IBM’s efficient PatchTSMixer time-series model has been shown to outperform other forecasting models by up to 60% while using two to three times less memory.
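To make the idea of a variable-by-variable matrix concrete, here is a small sketch that uses a plain Pearson correlation as a stand-in. It is an assumption for illustration only: the article does not say how IBM’s model computes these relationships, and the sensor names and readings below are invented.

```python
from math import sqrt
from typing import Dict, List


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation of two series aligned on the same time steps."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        return 0.0  # a constant series carries no correlation signal
    return cov / (spread_x * spread_y)


def correlation_matrix(channels: Dict[str, List[float]]) -> Dict[str, Dict[str, float]]:
    """Build a matrix of pairwise relationships between variables."""
    names = list(channels)
    return {a: {b: pearson(channels[a], channels[b]) for b in names} for a in names}


# Invented readings: every list holds values measured at the same five time steps.
readings = {
    "temperature": [20.0, 21.0, 22.0, 23.0, 24.0],
    "pressure": [40.0, 42.0, 44.0, 46.0, 48.0],  # rises with temperature
    "flow": [5.0, 4.0, 3.0, 2.0, 1.0],  # falls as temperature rises
}
matrix = correlation_matrix(readings)
for name, row in matrix.items():
    print(name, {other: round(value, 2) for other, value in row.items()})
```

Read as a graph, each variable is a node and each strong entry in the matrix is an edge. A learned model would presumably capture richer, nonlinear relationships than this linear measure does.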

Learning how sub-atomic particles behave

Predicting how high-energy particles will multiply in a collider is no easy task. Statistical calculations are needed to model the particles produced in the initial collision, and the shower of thousands to millions of secondary particles created in the collider’s detectors later. The fate of each particle must be calculated one by one.

The signals they leave in the detectors are used to infer a target particle’s identity, momentum, and other properties. Simulation software currently provides high-resolution estimates, but researchers hope that foundation models might simplify the computation involved and provide comparable results at least 100 times faster.

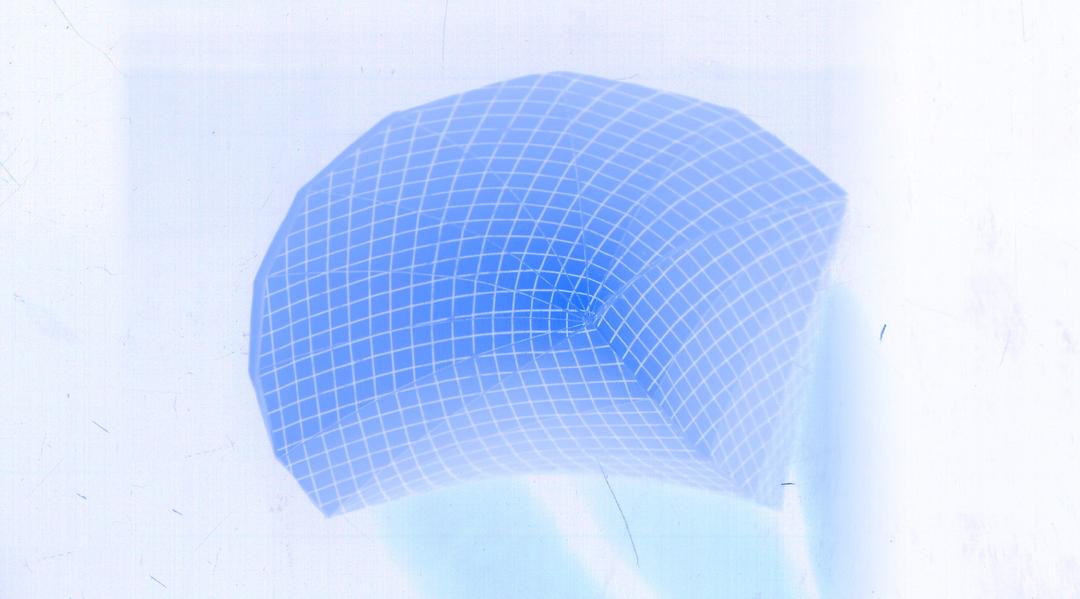

IBM’s work with CERN is focused on the part of the detector known as the calorimeter, which measures the position and energy of shower particles. Using synthetic data from past simulations, IBM trained its PatchTSMixer model to understand how a typical shower unfolds. Values plugged into the model included the angle and energy of the incoming particle, and the amount of energy deposited in the calorimeter by the shower of secondary particles.

When asked to replicate a shower with a set of desired parameters, PatchTSMixer can quickly give estimates of the final energy values. “Each shower event is different, with a randomly determined outcome,” said IBM researcher Kyongmin Yeo. “We’ve adapted our time series foundation model to simulate these random events by learning the probability distribution using a generative method.”

To be useful, the estimates must be fast as well as accurate. “The results so far look very promising,” said Anna Zaborowska, a physicist at CERN. “If we can speed up the simulation of single showers by 100 times that would be brilliant.”

What’s next

CERN has proposed building a $17 billion accelerator three times the size of the LHC and is currently using simulations to design it. If IBM’s fast simulations are successful, and the Future Circular Collider (FCC) is approved, IBM’s models could be used to design experiments in the new collider and interpret the results.

Fast simulations could also play a role in shrinking the LHC’s carbon footprint. “In principle, it could lead to substantial energy savings,” said Zaborowska.

Beyond CERN’s work to unlock the mysteries of the universe, time series foundation models hold the potential to optimize industrial processes in myriad ways to grow revenue and cut costs. Sensors now give enterprises a detailed view of the business, from each step on the assembly line to each power station on a multi-state grid.

Until transformers came along, mining this firehose of sensor data for insights was either impractical or impossible. IBM is now working with several companies in disparate industries to take their sensor data and build proxy models of the manufacturing process that can be interrogated for ways of increasing throughput or reducing energy use.

“These learned proxy models are also a good way to monitor the behavior of a machine or process by comparing observed outcomes against predictions to flag any anomalies,” said Kalagnanam.
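The monitoring idea can be sketched in a few lines. The function name `flag_anomalies`, the fixed threshold, and the numbers are all hypothetical; a real deployment would likely choose the threshold from the model’s historical errors.

```python
from typing import List


def flag_anomalies(observed: List[float], predicted: List[float], threshold: float) -> List[int]:
    """Return the time steps where observation and prediction disagree.

    A step is flagged when the absolute gap between the observed value
    and the proxy model's prediction exceeds the threshold.
    """
    if len(observed) != len(predicted):
        raise ValueError("observed and predicted must have the same length")
    return [
        step
        for step, (obs, pred) in enumerate(zip(observed, predicted))
        if abs(obs - pred) > threshold
    ]


# Invented values: the proxy model's forecast versus what the sensor reported.
predicted = [10.0, 10.5, 11.0, 11.5, 12.0]
observed = [10.1, 10.4, 14.2, 11.6, 11.9]
print(flag_anomalies(observed, predicted, threshold=1.0))  # prints [2]
```

Only the third reading strays far from its prediction, so only that step is flagged for inspection.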

In addition to working with enterprises to optimize their operations with time-series transformers, IBM has open-sourced its PatchTST and PatchTSMixer models on Hugging Face, where they’ve been downloaded several thousand times in the last two months.

By: Kim Martineau

Originally published at: IBM