Last month, Indonesia’s former Minister of Research and Technology boasted that in 2019, Indonesia had overtaken Malaysia and Singapore in the number of academic articles published.

However, scholars argue that this ‘productivity’ is the product of a heavily criticised system for measuring academics’ performance, one based mostly on output and citations rather than on research quality.

The output-based performance review has also fuelled rampant unethical practices in Indonesia’s research community, including ‘data dredging’ – inflating sample sizes or tampering with statistical models – to produce results that confirm researchers’ hypotheses, since such results are favoured by journals.

Sandersan Onie, a PhD candidate at the University of New South Wales, said during a webinar on research transparency held late last month that these dishonest practices have increased as researchers prioritise publishability over accuracy.

“Experimenters might perform ten different kinds of statistical procedures, but they only report the one most likely to get published and cited. They perform selective reporting,” he said.

More than 1,000 participants from around 30 institutions streamed the webinar titled “Advancing Science in Indonesia: Current Global Research Practices”, the first of its kind for Indonesia.

Simine Vazire, a professor of psychology at the University of California-Davis, was one of the main speakers at the event.

She says that although there are many ways to promote transparency, there are generally three strategies that can directly boost research credibility.

Pre-registration of the experimentation process

The first method Vazire suggested is a practice known as pre-registration: a time-stamped, read-only version of a research plan, created before the study is conducted.

Researchers must set out the research they plan to undertake before gathering even a sliver of data.

This information can range from the proposed hypotheses to the statistical methods that will be used to analyse the data.

The document will then be archived in a system such as the Open Science Framework (OSF), an open-source research management platform.

“If you get an exciting finding with an outcome, you won’t be able to later hide the fact that you also measured twelve other outcomes,” Vazire said.

“You’re committing ahead of time to being public about how many outcomes and treatments you had.”
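Vazire’s point about the twelve outcomes can be made concrete with a small simulation. The Python sketch below is a hypothetical illustration, not anything presented at the webinar. It assumes a study with 12 independent, normally distributed outcomes (known standard deviation of 1, 30 participants per group) and no true effect on any of them, then compares the false-positive rate when only one pre-registered outcome is tested with the rate when a researcher reports whichever outcome gives the smallest p-value. All function names and parameter values are illustrative assumptions.

```python
import random
from statistics import NormalDist, fmean

ALPHA = 0.05               # conventional significance threshold
STD_NORMAL = NormalDist()  # standard normal distribution for z-tests


def z_test_p_value(group_a, group_b, sigma=1.0):
    """Two-sided two-sample z-test p-value, assuming a known common SD (an assumption)."""
    se = sigma * (1 / len(group_a) + 1 / len(group_b)) ** 0.5
    z = (fmean(group_a) - fmean(group_b)) / se
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def simulate_study(n_outcomes, n_per_group, rng):
    """One hypothetical study with NO true effect on any outcome; returns every p-value."""
    p_values = []
    for _ in range(n_outcomes):
        treatment = [rng.gauss(0, 1) for _ in range(n_per_group)]
        control = [rng.gauss(0, 1) for _ in range(n_per_group)]
        p_values.append(z_test_p_value(treatment, control))
    return p_values


def false_positive_rates(n_studies=2000, n_outcomes=12, n_per_group=30, seed=1):
    """Share of null studies declared 'significant' under two reporting strategies."""
    rng = random.Random(seed)
    prereg_hits = 0  # only the first, pre-registered outcome is tested
    cherry_hits = 0  # the smallest p-value among all outcomes is reported
    for _ in range(n_studies):
        ps = simulate_study(n_outcomes, n_per_group, rng)
        prereg_hits += int(ps[0] < ALPHA)
        cherry_hits += int(min(ps) < ALPHA)
    return prereg_hits / n_studies, cherry_hits / n_studies


if __name__ == "__main__":
    prereg, cherry = false_positive_rates()
    theory = 1 - (1 - ALPHA) ** 12  # family-wise error rate for 12 independent tests
    print(f"pre-registered single outcome:   {prereg:.3f}")
    print(f"best of 12 outcomes:             {cherry:.3f}")
    print(f"theory for 12 independent tests: {theory:.3f}")
```

With a 5% threshold, the pre-registered rate should stay close to 5%, while reporting the best of 12 independent outcomes pushes it towards the theoretical 1 - 0.95^12, or roughly 46%. Real outcomes are usually correlated, which typically shrinks this inflation, but it does not remove it.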

The second practice is submitting what are known as registered reports. Essentially, these are a more rigorous and formal version of pre-registration.

Authors send a journal a pre-drafted manuscript that explains the research plan in more detail, for initial review.

If the editors decide that the study meets all of their criteria for good research design, they give the authors what’s called an ‘in principle’ acceptance: the editors accept the article before the authors have collected any data. The journal then publishes the article regardless of the study’s results, which protects scientific integrity.

“The nice thing about registered reports is that the decision to accept or reject the paper for publication does not depend on whether your hypothesis was right, whether you got a statistically significant result, or whether the reports are shocking or exciting or likely to get cited a lot,” Vazire said.

These pre-registration practices deter scientists from data dredging and ‘hypothesising after results are known’ (HARKing), because researchers who deviate from their previously agreed-upon research method must justify why they did so.

Registered reports can also address the problem that research with negative results is less likely to be published.

Negative results are just as important to science, but journals consider them unappealing and they attract fewer citations.

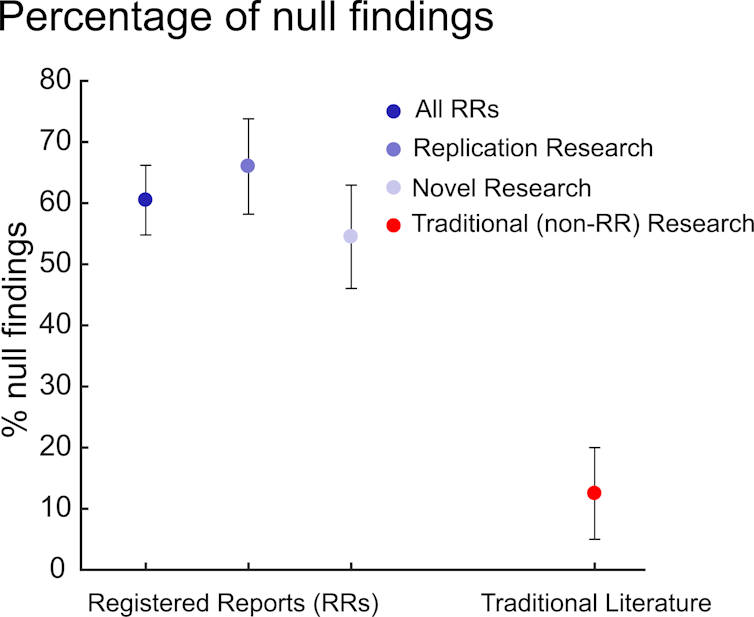

A 2019 study from Cardiff University, for example, found that around 60% of research papers published through the registered reports system reported negative results.

Compare that with estimates for the wider literature, in which only about 10% of papers published in journals report negative results, a sign of extreme publication bias.

Currently, only 209 journals in the world offer a registered reports mechanism in their publication process, and none of them are from Indonesia. This amounts to less than 1% of the 24,600 peer-reviewed journal titles indexed in Scopus.

Rizqy Amelia Zein, a psychology lecturer at Universitas Airlangga and one of the webinar’s organisers, said in a follow-up interview that Indonesian publications still have a long way to go.

“Most Indonesian journals only care about cosmetic aspects. They are rarely concerned with quality, as their main priority is of course indexing in Scopus,” she said.

Encouraging data sharing through badges

The last method Vazire recommends is for journals to use badges to motivate researchers to share data.

The best example is perhaps the Association for Psychological Science. Three of its prestigious journals, including Psychological Science, award badges to authors and their accepted manuscripts in recognition of open scientific practices.

Three badges are available: one for making data available in a public repository, one for providing open materials, and one for pre-registering the study.

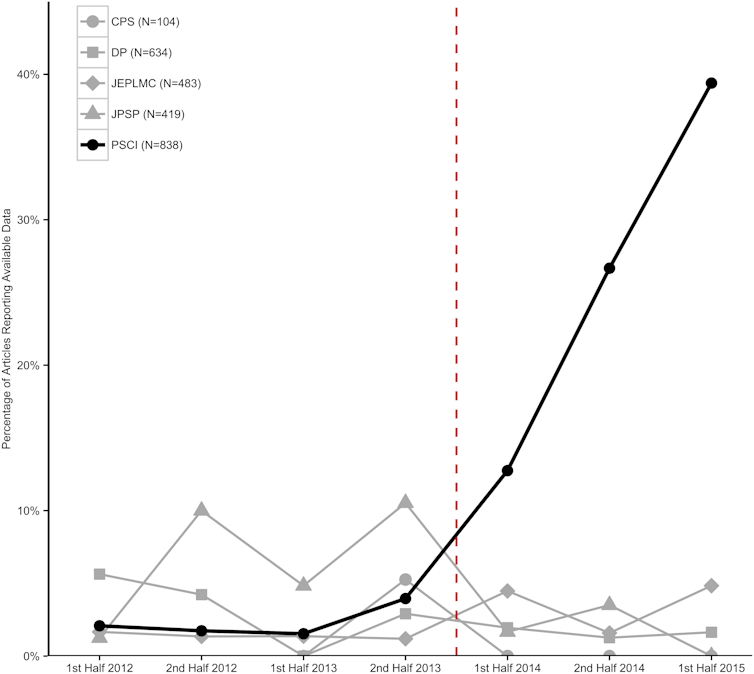

A 2016 American study confirmed that after these badges were introduced, the number of Psychological Science articles reporting open data skyrocketed.

“I also saw a trickle-down effect of this policy at smaller journals. Although they didn’t have any badges or have policies on open data, we still saw many more authors sharing their data because they understood now people trusted papers more when they had open data,” Vazire said.

A 2017 study by researchers from the Queensland University of Technology suggested that open data badges were the only tested incentive that motivated researchers to share data.

Sandersan says that these transparency and accountability practices are not the ultimate solution, but they are steps toward the end goal of producing high-quality, credible research.

“It’s very important we not only read the paper, but we know what decisions the researchers make and how they got there. By demanding transparency, researchers can show their whole thought process,” he said.

“If we only incentivise h-index and publication output and all of these things, it will only help us create bad research.”

Luthfi T. Dzulfikar, Associate Editor, The Conversation

This article is republished from The Conversation under a Creative Commons license. Read the original article.