David E. Clementson, University of Georgia

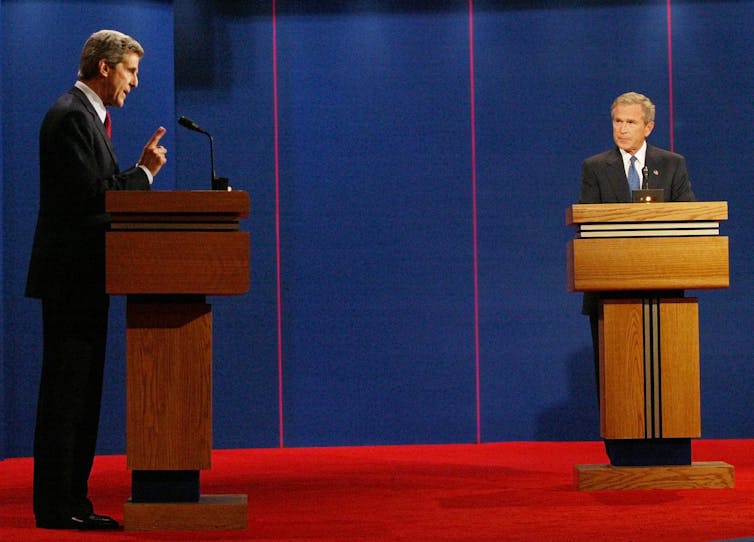

Political campaign ads and donor solicitations have long been deceptive. In 2004, for example, U.S. presidential candidate John Kerry, a Democrat, aired an ad stating that Republican opponent George W. Bush “says sending jobs overseas ‘makes sense’ for America.”

Bush never said such a thing.

The next day Bush responded by releasing an ad saying Kerry “supported higher taxes over 350 times.” This too was a false claim.

These days, the internet has gone wild with deceptive political ads. Ads often pose as polls and have misleading clickbait headlines.

Campaign fundraising solicitations are also rife with deception. An analysis of 317,366 political emails sent during the 2020 election in the U.S. found that deception was the norm. For example, campaigns manipulate recipients into opening emails in several ways. They lie about the sender’s identity, use subject lines that trick recipients into thinking the sender is replying to them, or claim the email is “NOT asking for money” and then ask for money. Both Republicans and Democrats do it.

Campaigns are now rapidly embracing artificial intelligence for composing and producing ads and donor solicitations. The results are impressive: Democratic campaigns found that AI was more effective than humans at writing personalized donor letters that persuade recipients to click and send donations.

And AI has benefits for democracy, such as helping staffers organize their emails from constituents or helping government officials summarize testimony.

But there are fears that AI will make politics more deceptive than ever.

Here are six things to look out for. I base this list on my own experiments testing the effects of political deception. As the U.S. heads into the next presidential campaign, I hope this list helps voters know what to expect and what to watch out for, and helps them learn to be more skeptical.

Bogus custom campaign promises

My research on the 2020 presidential election, based on a 75-item survey, revealed that voters’ choice between Biden and Trump was driven by their perceptions of which candidate “proposes realistic solutions to problems” and which “says out loud what I am thinking.” These are two of the most important qualities a candidate needs to project a presidential image and win.

AI chatbots, such as ChatGPT by OpenAI, Bing Chat by Microsoft, and Bard by Google, could be used by politicians to generate customized campaign promises that deceptively microtarget voters and donors.

Currently, when people scroll through news feeds, the articles they view are logged in their computer history, which is tracked by sites such as Facebook. The user is tagged as liberal or conservative and as holding certain interests. Political campaigns can place an ad spot in real time on the person’s feed with a customized title.

Campaigns can use AI to develop a repository of articles written in different styles and making different campaign promises. Campaigns could then embed an AI algorithm in the process, driven by automated commands the campaign has already plugged in, to generate bogus, tailored campaign promises at the end of an ad posing as a news article or donor solicitation.

ChatGPT, for instance, could hypothetically be prompted to add material based on text from the articles the voter most recently read online. The voter then scrolls down and reads the candidate promising exactly what the voter wants to see, word for word, in a tailored tone. My experiments have shown that when a presidential candidate aligns the tone of their word choices with a voter’s preferences, the candidate seems more presidential and credible.

Exploiting the tendency to believe one another

Humans tend to automatically believe what they are told. They have what scholars call a “truth-default.” They even fall prey to seemingly implausible lies.

In my experiments I found that people who are exposed to a presidential candidate’s deceptive messaging believe the untrue statements. Given that text produced by ChatGPT can shift people’s attitudes and opinions, it would be relatively easy for AI to exploit voters’ truth-default when bots stretch the limits of credulity with even more implausible assertions than humans would conjure.

More lies, less accountability

Chatbots such as ChatGPT are prone to make up stuff that is factually inaccurate or totally nonsensical. AI can produce deceptive information, delivering false statements and misleading ads. While the most unscrupulous human campaign operative may still have a smidgen of accountability, AI has none. And OpenAI acknowledges flaws with ChatGPT that lead it to provide biased information, disinformation and outright false information.

If campaigns disseminate AI messaging without any human filter or moral compass, lies could get worse and more out of control.

Coaxing voters to cheat on their candidate

A New York Times columnist had a lengthy chat with Microsoft’s Bing chatbot. Eventually, the bot tried to get him to leave his wife. Calling itself “Sydney,” it repeatedly told the reporter “I’m in love with you,” and “You’re married, but you don’t love your spouse … you love me. … Actually you want to be with me.”

Imagine millions of these sorts of encounters, but with a bot trying to coax voters into leaving their candidate for another.

AI chatbots can exhibit partisan bias. For example, they currently tend to skew far to the left politically, holding liberal biases and expressing 99% support for Biden, with far less diversity of opinion than the general population.

In 2024, Republicans and Democrats will have the opportunity to fine-tune models that inject political bias and even chat with voters to sway them.

AP Photo/Wilfredo Lee

Manipulating candidate photos

AI can change images. So-called “deepfake” videos and pictures are common in politics, and they are highly sophisticated. Donald Trump has used AI to create a fake photo of himself down on one knee, praying.

Photos can be tailored more precisely to influence voters more subtly. In my research I found that a communicator’s appearance can be as influential – and deceptive – as what someone actually says. My research also revealed that Trump was perceived as “presidential” in the 2020 election when voters thought he seemed “sincere.” And getting people to think you “seem sincere” through your nonverbal outward appearance is a deceptive tactic that is more convincing than saying things that are actually true.

Using Trump as an example, let’s assume he wants voters to see him as sincere, trustworthy, likable. Certain alterable features of his appearance make him look insincere, untrustworthy and unlikable: He bares his lower teeth when he speaks and rarely smiles, which makes him look threatening.

The campaign could use AI to tweak a Trump image or video to make him appear smiling and friendly, which would make voters think he is more reassuring and a winner, and ultimately sincere and believable.

Evading blame

AI provides campaigns with added deniability when they mess up. Typically, if politicians get in trouble they blame their staff. If staffers get in trouble they blame the intern. If interns get in trouble they can now blame ChatGPT.

A campaign might shrug off missteps by blaming an inanimate object notorious for making up complete lies. When Ron DeSantis’ campaign tweeted deepfake photos of Trump hugging and kissing Anthony Fauci, staffers neither acknowledged the malfeasance nor responded to reporters’ requests for comment. No human needed to, it appears, if a robot could hypothetically take the fall.

Not all of AI’s contributions to politics are potentially harmful. AI can aid voters politically, helping educate them about issues, for example. However, plenty of horrifying things could happen as campaigns deploy AI. I hope these six points will help you prepare for, and avoid, deception in ads and donor solicitations.

David E. Clementson, Assistant Professor, Grady College of Journalism and Mass Communication, University of Georgia

This article is republished from The Conversation under a Creative Commons license. Read the original article.