- Artificial intelligence (AI) can help healthcare systems worldwide cope with mounting pressure and avoid wasted healthcare spending caused by diminishing capacity.

- AI in healthcare still faces challenges, such as unreliable results and inappropriate application in the local context, particularly in low- and middle-income countries.

- A consistent approach is key to using AI effectively in healthcare, including robust data protection, guiding principles, accountability, open-source software and an accessible testing space.

The future health of our populations will change dramatically in high-, middle- and low-income countries alike.

The impacts on healthcare of a rapidly changing climate, declining air and water quality, conflict and population displacement will coincide with longer-running trends: ageing populations and, in low- and middle-income countries, where healthcare provision is least developed and most fragile, a shift in the burden of disease from infectious diseases to non-communicable, mostly chronic diseases.

These powerful drivers add pressure to already stressed healthcare systems. At the beginning of 2023, an average of 61% of healthcare professionals in the United States and Europe reported understaffing due to resignations and burnout. Likewise, the workforce shortage in low- and middle-income countries is exacerbated by a lack of resources to train and retain medical professionals.

This capacity problem has led to many preventable lapses in spending and care. In the United States alone, avoidable waste in healthcare is estimated to cost around $850 billion, or 25% of healthcare spending.

Better disease monitoring, procurement, compliance and diagnosis, along with fewer errors and less negligence, could all yield savings, and that is where technology can make a difference.

Prescribing AI to mend healthcare

To date, AI has proven transformative in narrow areas of healthcare, such as digital pathology (for instance, recognizing lesions on medical images) and research and development, notably in modelling protein folding. However, system-wide adoption of AI has so far proved elusive.

Nonetheless, with the right leadership, careful future-focused regulation and strategic investment, advances in AI could significantly increase care capacity in the medium term. These tools will not replace doctors in the foreseeable future, but they will support and augment decisions made by healthcare professionals. By reducing the scope for human error, AI can help prevent medical mistakes, reduce inefficiency and save lives. Put simply, AI-enhanced systems could increase both the productivity and the availability of healthcare.

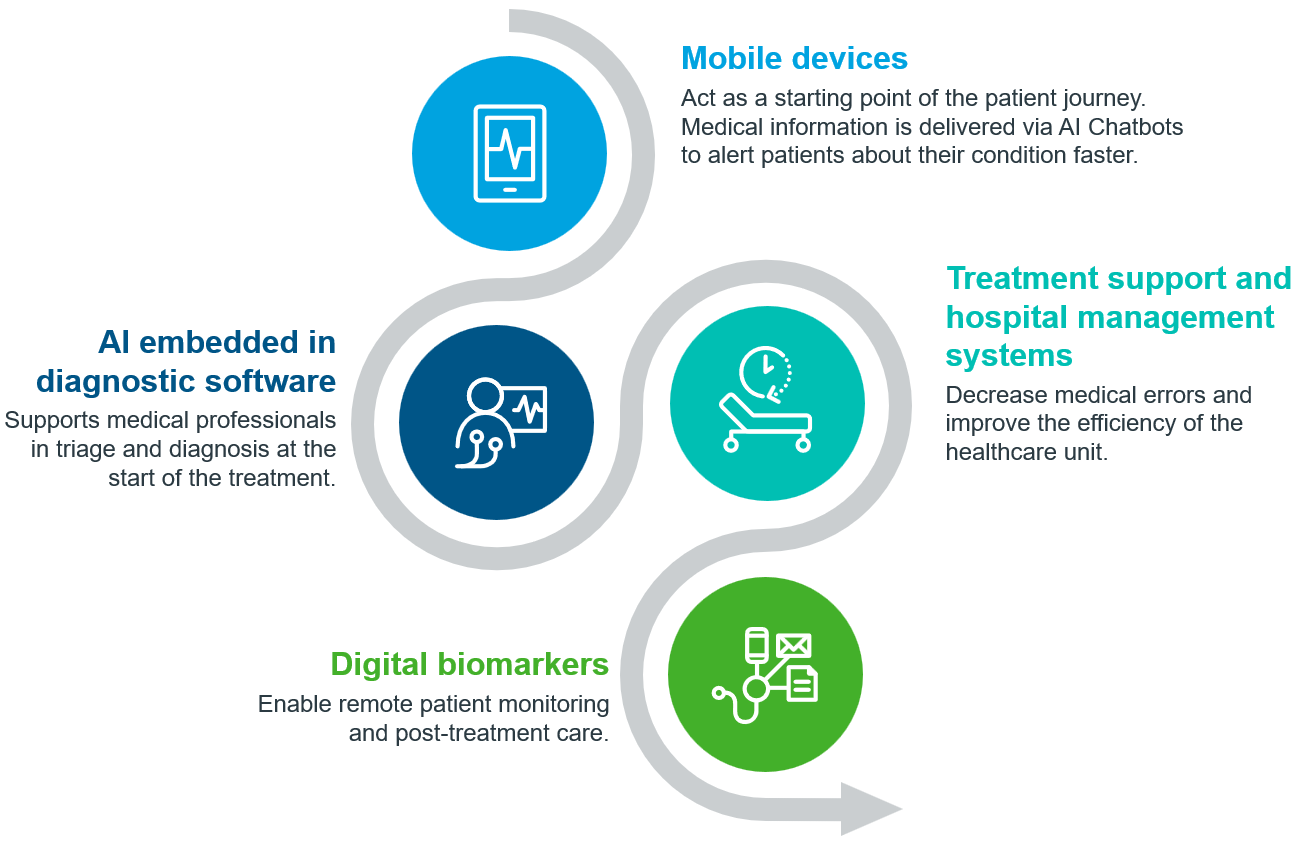

A future patient journey might use AI at various stages, from accessing a health app to diagnostics, treatment and remote patient monitoring. Automatic monitoring and triage have already demonstrated potential at the beginning of a patient’s journey, and this support could continue through treatment and post-discharge care.

Bold, strategic adoption of AI could expand access to healthcare in low- and middle-income countries while directly narrowing the technology gap between them and high-income countries. With fewer legacy systems and widespread mobile devices, low- and middle-income countries have the opportunity to leapfrog to mobile AI platforms for more effective population engagement. There are still barriers to address, however.

Reported challenges that have held back wider use of AI in healthcare in low- and middle-income countries include low reliability, mixed impacts on workflows, poor usability and a lack of understanding of the local context.

Globally, the promise of rapid change alone will not drive systematic adoption of AI in healthcare. That will require trust, privacy and regulatory guardrails, combined with education that helps healthcare providers and policymakers understand how to apply AI appropriately.

Current generative AI models can be unpredictable, and how to make them produce reliable outputs is still poorly understood. Future AI algorithms will be trained on, and will handle, vast amounts of sensitive patient data: an enormous responsibility, given the potential risks if that data is mismanaged.

Do no harm

Global pharmaceutical and healthcare regulation is the product of decades of painstaking evolution and optimization. However, even the best-funded regulatory systems have historically struggled to keep up with the innovation they regulate, as shown by the lag in introducing biosimilars in the United States and in regulating medicine promotion on social media. AI is developing faster, and across a broader scope, than anything regulators have had to address before. To prevent misuse, guiding principles for building safe AI tools must be agreed upon: a Hippocratic Oath for AI.

During Davos 24, this year’s Annual Meeting in Davos, Switzerland, world leaders must quickly reach consensus and build on the 30 points in the World Economic Forum’s 2023 report, The Presidio Recommendations on Responsible Generative AI, to provide healthcare-specific recommendations. Flexible and adaptive guiding principles should foster innovation while ensuring safety, enable human governance at every stage of an AI solution’s lifecycle and even account for a future in which AI is given increasing independence.

First steps are being taken towards codifying regulation, including the EU AI Act, the US Executive Order on Safe, Secure and Trustworthy AI, and the world’s first AI Safety Summit at Bletchley Park. More should be done, however, to extend these safeguards to low- and middle-income countries and to encourage innovation in these countries, where demand for care already exceeds capacity.

A unified regulatory landscape adopted across multiple low- and middle-income countries, for example through a Pan-African approach, would allow AI-enhanced healthcare to be built from the ground up, giving developers a framework that fosters a culture of safety, transparency and accountability.

With caution and consensus across stakeholders, AI can realize its significant potential to alleviate capacity constraints. However, the need to agree on a shared path is urgent, and leaders in the field should follow five steps:

- Build rapid consensus on guiding principles that can be applied faster than formal regulations. High-profile organizations such as the World Economic Forum are ideal forums for leaders to form agreements.

- Comply with the most stringent data protection regulations. Healthcare data is being used in new ways, so rigour in protecting patient data will minimize risks and build trust.

- Promote the value of safety, transparency and accountability. Ensure that accelerators, innovation hubs and venture capitalists follow these principles, as they are best placed to build these foundations into startups that show the ability to scale.

- Bridge new technologies to low- and middle-income countries. Use open-source software where possible and build offerings with scale and affordability in mind so developing economies can take advantage of emerging technologies.

- Run programmes to introduce AI into clinical practice. Create safe environments in hospitals to test AI tools with a clear potential to increase capacity.

These steps will begin to foster a culture in which AI can be developed safely even before comprehensive regulations are in place. With the right approach, trust can be built to test these tools in a clinical sandbox and ultimately reduce healthcare providers’ workload in high-, middle- and low-income countries, laying the foundations to bridge the healthcare capacity gap.

Image credits: IQVIA

By: Aurelio Arias (Director, Thought Leadership, IQVIA) and Sarah Rickwood (Vice President, Thought Leadership, IQVIA)

Originally published at: World Economic Forum